I first wrote about Stable Diffusion last August, shortly after its initial public release, when Stability AI transformed LLM-based image generation from a black-box gimmick behind a paywall into something that could be used and built on by anyone with a Github account. In the intervening year, that’s exactly what’s happened.

Even at the outset, Stable Diffusion gave budding synthographers a wealth of tools not available for DALL-E 2 or Midjourney. Being able to control settings like randomness seeds, samplers, step counts, resolution and CFG scale, plus seemingly endless possibilities of both the text and image prompts were, it appeared, a recipe for creating just about anything. By altering text for the same seed, or running multiple iterations of img2img, the synthographer could gradually nudge an image with potential until it matched their vision. But it didn’t stop there. Updates and innovations have come so thick and fast that my every previous attempt at writing this post (for over a year) was undermined by some new advance or technique that demanded inclusion.

I won’t pretend to be comprehensive here, but I’ll try to cover major advances and useful techniques pioneered over the last year below. We’ll look at three aspects of image generation: text prompting, models, and image prompting.

# Text prompting

The first new thing that got big after my last post was the negative prompt, i.e. an additional prompt specifying what should not be in the image. For example, if you got an image of a person with hat, you could regenerate it with the same seed and the word “hat” in the negative prompt to get a similar image without the hat.1

Hat

No hat

There were also innovations in prompting itself, beyond the mere tweaking of words. SD has always weighted words at the start of a prompt more than words at the end, but the AUTOMATIC1111 webui implemented an additional way to control emphasis – words surrounded by () would be weighted more and words with [] weighted less. Add additional brackets to amplify the effect, or control it more precisely with syntax like this: (emphasised:1.5), (de-emphasised:0.5).

People also experimented with changes to the prompt as generation went on. In one method, alternating words, the prompt [grey|orange] cat would use grey cat for step 1, orange cat for step 2, grey cat for step 3, and so on, providing a way to blend concepts in a manner difficult to achieve with text alone. In another method, prompt editing, the first option would be used for N steps, and the second option would be used for the rest. This concept was taken further by Prompt Fusion, which allows more than one change and finer control of the interpolation between prompts.

Regional prompting is another useful technique, helping to separate distinct areas of a given prompt – without this, Stable Diffusion will apply colours and details willy-nilly – ordinarily, “a woman with brown eyes wearing a blue dress” may generate a woman with blue eyes and a brown dress, or even a woman with a brown dress and green eyes standing in front of a blue background!

In the days of the 1.4 model, prompting often felt like the be-all and end-all of image generation. Prompting was the only interface exposed by the closed-source online image generators, DALL-E 2 and Midjourney, that immediately preceded SD 1.4’s public release. The model was a Library of Babel which theoretically contained every variation of every image that had ever been made or ever could be made, all of them locatable by entering just the right series of characters. By this may you contemplate the variation of the 600 million parameters.

But even with positive and negative prompts, word emphasis and de-emphasis, prompt editing and all kinds of theorising and experimentation with different words in different combinations, mere language was found wanting as the sole instrument for navigating latent space. It’s possible to get just what you want from prompting alone, in the same way that it’s possible to find a billionaire’s BTC private key in this index.

# Models

Since my last post, Stability AI has released several models that improve on that initial model.ckpt (v1.4), most recently Stable Diffusion XL 1.0, a model trained on 1024x1024 images and thus capable of greater resolutions and detail right out of the gate. Where not otherwise noted, I’ve used this model to generate the images in this post.

But rather than wait on Stable Diffusion’s official model releases, people started doing their own training. This took a few different forms. One immediate demand was for the ability to add subjects to the training data – it’s trivial to generate recognisable pictures of celebrities with the base model, but what if you want to generate pictures of yourself or your friends (or your cat)? Enter Textual Inversion, followed by DreamBooth, followed by hypernetworks and LoRA. Some of these approaches create new model files, while others create small files called embeddings that can be used with different base models.

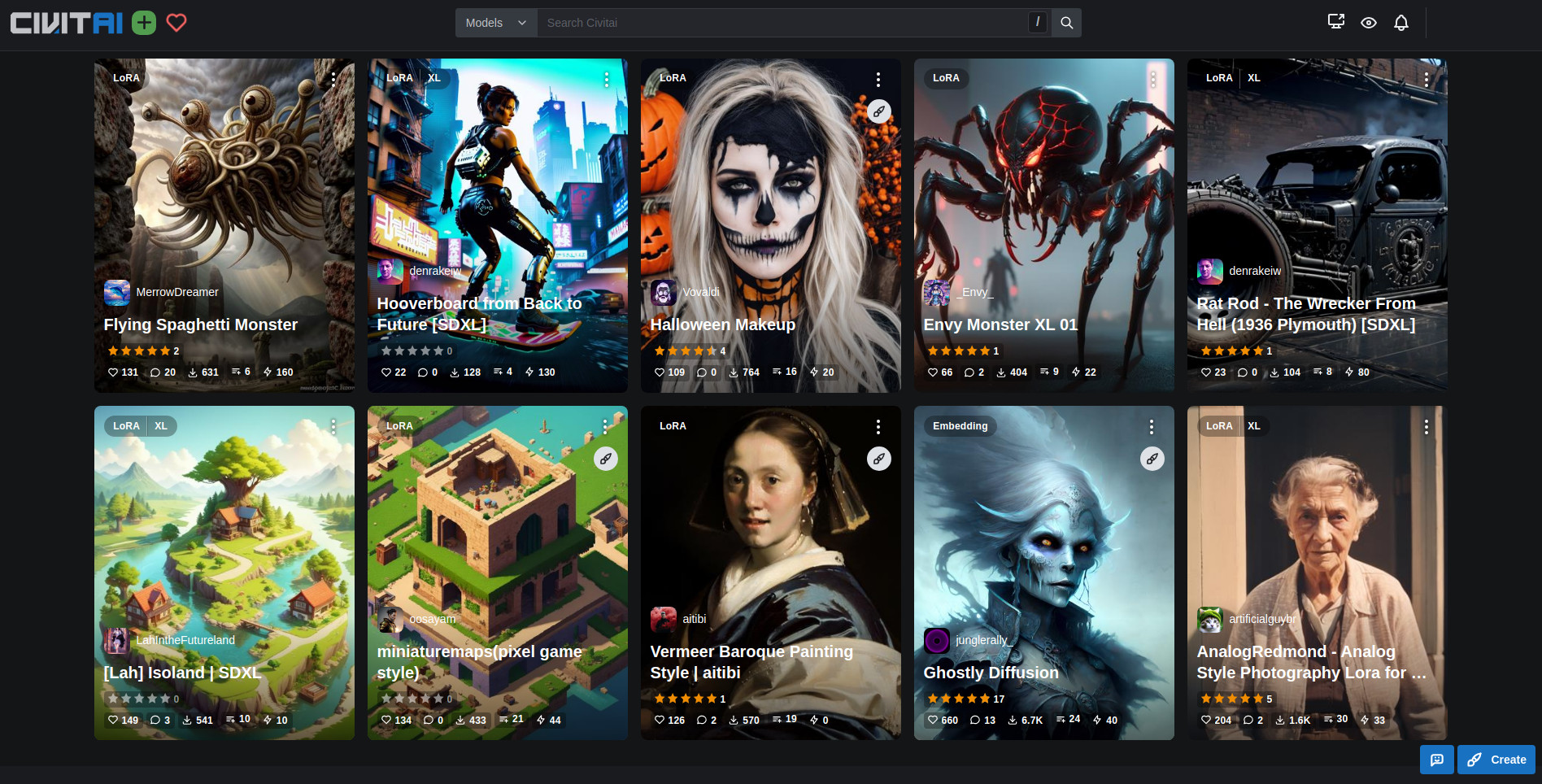

But there’s a lot more to be done with additional training than just adding new faces. CivitAI, the main repository for models and embeddings, contains a plethora of models trained to achieve specific styles and additional fidelity for particular subjects. Most of these are based on Stable Diffusion 1.5, and the most popular ones produce much better results in their specific areas (digital art, realism and anime, mostly). There are even embeddings for facial expressions and getting multiple angles of the same character in one image. Some technically inclined visual artists have even trained models to produce works in their own style.

Some models available on CivitAI

Training can be quite computationally intensive, and also requires curation of your own set of captioned images with the right dimensions, so it’s not for everyone. In some sense, training your own models and embeddings is just a more powerful way of using Stable Diffusion’s image input functionality, img2img. Which brings us to…

# Image prompting

As we covered in my original SD post, img2img takes an input image in addition to a prompt, and uses the input image as the initial noise for generation. There are many different uses for this:

- Generating variations of existing images, either with altered prompts or masking, or just different randomness.

- Generating detailed pictures from crude drawings.

- Changing an image to a different style.

- Transferring a pose from one character to another.

Unfortunately, vanilla img2img doesn’t know which of these you’re trying to achieve with a given input, and the only extra variable you get to control is the number of diffusion steps to perform on top of the input image (often termed denoising). One solution to this problem is careful prompt engineering. Another solution is changing the way img2img works.

Last year, various methods were proposed for tweaking img2img to get more consistent results: Cross Attention Control, EDICT and img2img alternative are the ones I know of. The idea with all of these methods was to reverse the diffusion process and find the noise that would produce a given image, and then alter that noise to generate precise, minimal changes.

When this works, it works really well, but I haven’t had a lot of success with it in my own experiments.

The really useful and impressive advance in this space is ControlNet. ControlNet provides an additional input to the generation process in the form of a simplified version of a given image – this can take a variety of forms, but the most common are edge maps, depth maps and OpenPose skeletons. This produces outputs that are much closer to the inputs used and allow us to specify which aspect of an input image we care about. It can even be used without text prompts.

To use ControlNet, we first create a simplified map of a given input image using a preprocessor (edge-mapper, depth-mapper, pose-extractor, etc) and then pass that as an input to the generation process by applying it to the developing image at each step, either for the whole process or a portion of it, depending on how strong you want the ControlNet’s influence to be.

As we can see from the images below,2 each ControlNet model captures a different aspect of the input image. The canny edge model is concerned with preserving lines, the depth model with preserving shapes, and the pose model concerned only with the human figure and its configuration of limbs.3

ControlNet input image

Canny edge ControlNet

Depth ControlNet

OpenPose ControlNet

ControlNets can be combined, and additional models allow you to capture details such as colours, brightness and tiling patterns. The latter two have been combined to great effect to generate creative images that double as QR codes, as well as compositions like Spiral Town.

IP-Adapter is yet another image-input technique, in which input images are converted into tokens instead of being used as initial noise. This is very a good way of producing images based on a specific face without losing its likeness (and far quicker than training a DreamBooth model or LORA).4

It’s also great for combining images.

Donald Trump

Elon Musk

Donald Musk

All of the images above are one-shot generations for the sake of illustration, but any synthographer worth their burnt-out graphics card knows that to execute on a vision, you’re going to need multiple iterations. You might get a first pass from pure prompting, then use ControlNet to make some variations that preserve a pose from the original, then fix up some individual details with inpainting (or manual image editing), then expand it with outpainting, then combine it with something else through IP-Adapter, and on and on it goes. You might generate a scene’s background separately from its foreground elements, stick them together in an image editor, and then do a low denoising img2img pass to blend it all together.

None of this requires as much effort as, y’know, actually learning to draw or take good photographs, but it takes some ingenuity and experience (and more than a little luck with RNG) to coax attractive results from the machine.

Or so it seemed.

# DALL-E 3

Despite being first on the scene, OpenAI’s DALL-E 2 soon lost ground to newer versions of Midjourney and Stable Diffusion. As OpenAI focused their efforts on ChatGPT, their image generator was left to languish. But that all changed at the start of this month, with the public release of DALL-E 3, first replacing DALL-E 2 as the engine behind Bing Image Creator, and then as a ChatGPT plugin for users on a paid plan.

Just like its predecessor, DALL-E 3 takes in a natural language text prompt and generates four different images from it. It’s a lot better, both in terms of image quality and especially in terms of prompt understanding. Let’s compare outputs from the prompt I used at the start of my previous post:

the city of cape town as a martian colony artist's conception landscape painting

DALL-E 2

DALL-E 3 (Bing Image Creator)

And what’s more, if you use DALL-E 3 with ChatGPT, it will write multiple detailed variations of your prompt and use those to generate the four images.5 Here are a couple of results from that, with ChatGPT’s prompts as their captions.

Artistic conception of Cape Town on Mars. The familiar landmarks of Cape Town are juxtaposed against the alien backdrop of the Red Planet. Bio-domes shelter the city, and advanced infrastructure hints at a thriving Martian colony. The vastness of the Martian landscape stretches out, with dunes and rock formations.

Futuristic landscape painting of Cape Town on Mars. The city’s skyline rises from the Martian surface, with its iconic landmarks protected by large transparent shields. Martian rovers and settlers can be seen, and the vast red desert of Mars extends to the horizon.

Some have suggested that this spells an end for prompt engineering, but I’m not so certain. ChatGPT’s expansions create attractive, detailed images, but it may extrapolate things that weren’t intended by the prompter. For playing around and making images to post on social media or in blogposts like this one, that’s fine, but it doesn’t make executing on a specific vision much easier.

Stable Diffusion XL has some limited ability to generate images containing coherent text, a word or two at most. More often than not, this means that random words from the prompt will be written somewhere on the image, necessitating the negative prompt “text”. DALL-E 3, on the other hand, will happily generate whole sentences with one or two errors at most, and only when explicitly asked to write something.

Stable Diffusion XL

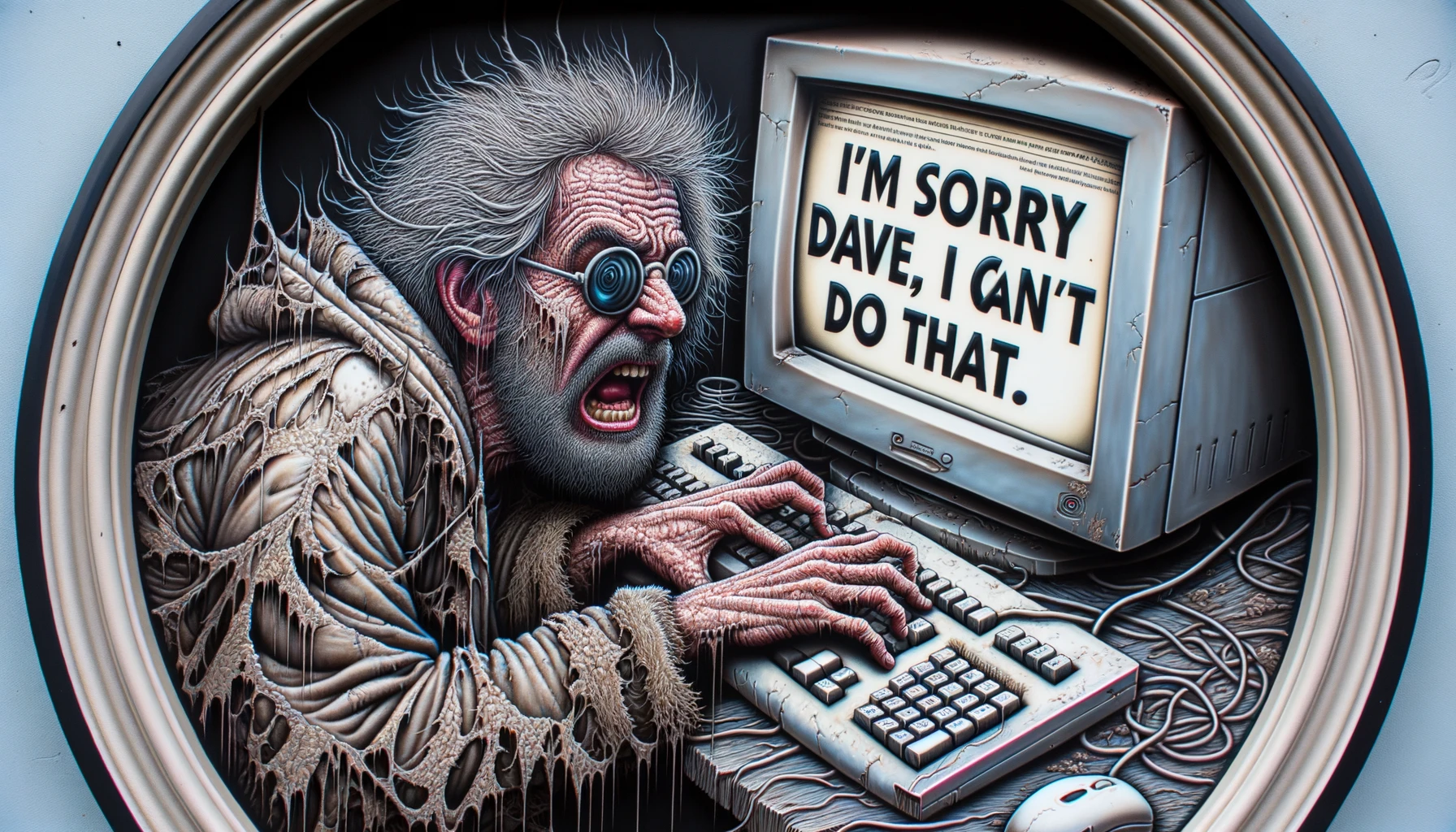

DALL-E 3 in ChatGPT

DALL-E 3’s ability to create pleasant, mostly coherent images with plenty of fine detail from text prompts alone (note the application of the word “frantic” in the image above) makes many of the Stable Diffusion techniques above feel like kludgey workarounds compensating for an inferior text-to-image core. Not that there isn’t a place for different types of image inputs, custom training, and funky prompt manipulation, but all of these techniques and tools would be far more powerful and useful alongside a text-to-image model as powerful as DALL-E 3. The potential in pure text prompting is far from exhausted, and OpenAI’s competitors have their work cut out for them.

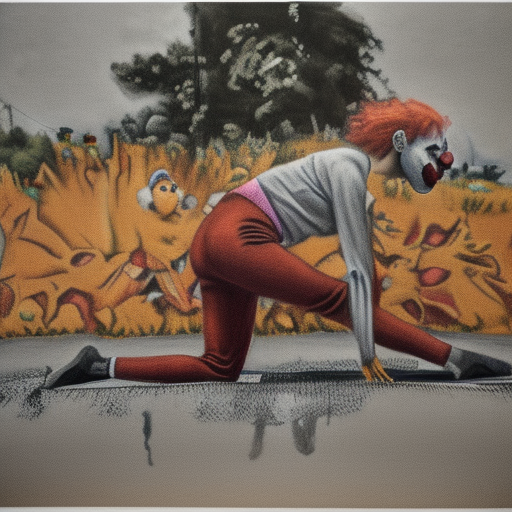

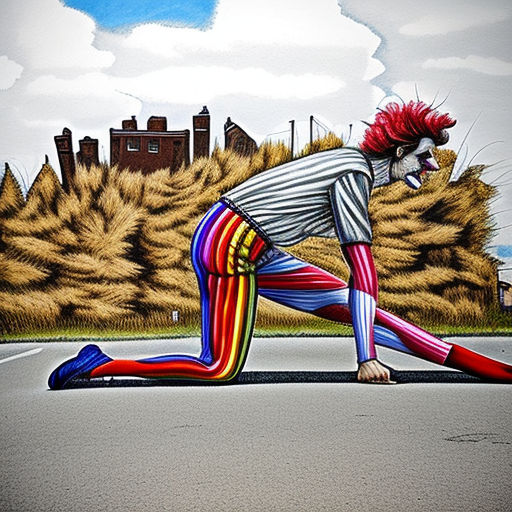

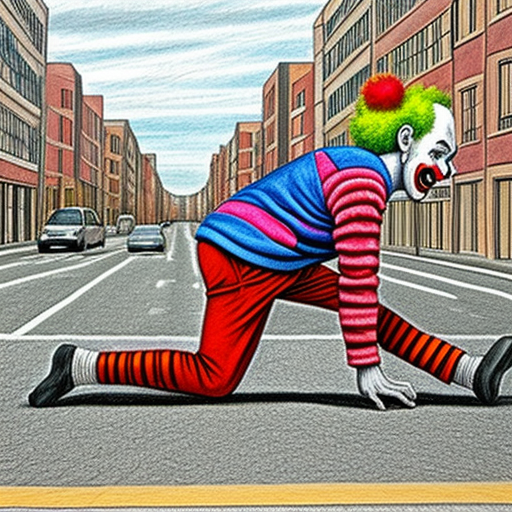

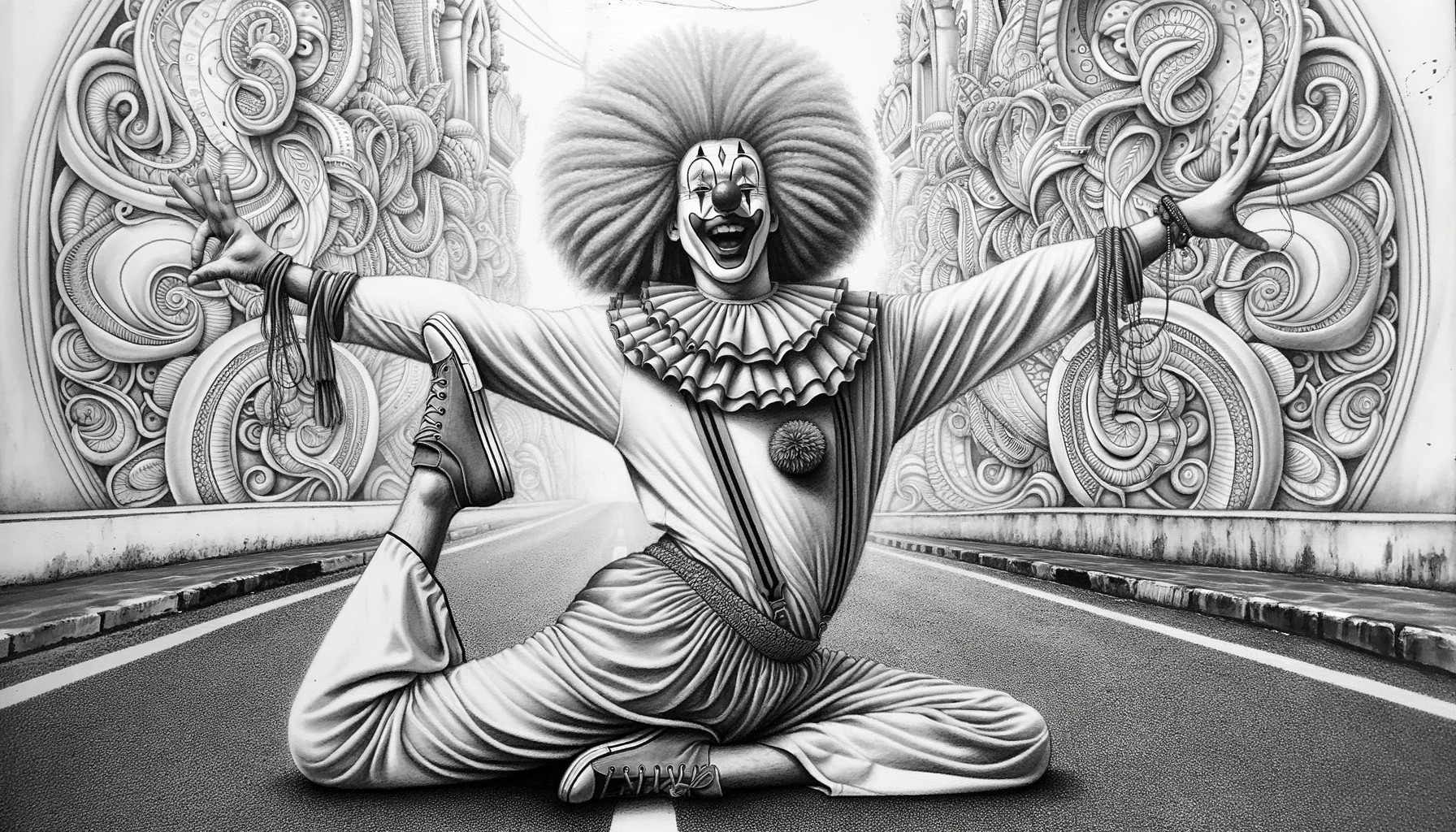

A clown doing yoga, no ControlNet needed

It’s been suggested that Stable Diffusion is a lot weaker than it might otherwise be due to the low quality of much of the LAION dataset it was trained on – many captions do not correspond to image content and many images are small, badly cropped or bad quality. With the advent of vision models, there’s a case to be made for using AI to recaption the whole dataset and training a new model on the result.6

That would be a good start, but I think it’s also likely that OpenAI is leveraging the same secret sauce present in ChatGPT to understand prompts. As far as they’re concerned, generating pictures is a side-effect of the true goal: creating a machine that understands us.

The Great Gatsby pixel art

The door into summer

A king seeks his robot’s counsel, circa 1200 AD

A neat logo

Zombies out for a jog

Not sure what this Pixar film’s about

-

Most of the time, at least. Diffusion models don’t actually understand English, it just feels like they do. ↩︎

-

These were generated with SD 1.5 models, as I find most of the current SDXL ControlNet models somewhat lacking. ↩︎

-

While the pose model captures the general configuration of human limbs, SD is not smart enough to know which limbs are which, or how many of each one a human should have. It gets especially confused with poses in which the person’s hands touch the floor – many discarded generations of the final image showed three-legged clowns. ↩︎

-

Here again, I’ve used SD 1.5 because of model compatibility. ↩︎

-

It’s possible that Bing Image Creator also does this under the hood. ↩︎

-

Update (2023-10-20): according to this paper, this is pretty much exactly how DALL-E 3 was built. ↩︎

David Yates.

David Yates.